Best Practices for Financial Data Storage

1. Introduction

Proper database partitioning can significantly reduce system response latency and increase data throughput. A well-designed partitioning strategy should consider various factors such as the characteristics of data distribution, typical query and computation scenarios, and the frequency of data updates.

This article provides general partitioning strategies and scripts for common types of financial data to help new DolphinDB users create appropriate databases and tables.

All scripts in this article are compatible with DolphinDB server version 2.00.10 or higher.

2. Overview of Storage Strategies

The storage strategies are divided into two categories: market data storage and factor storage.

2.1. Market Data Storage

The following partitioning strategies are recommended for various types of market data:

| Instrument Category | Dataset | Storage Engine | Partitioning Strategy | Partition Columns | Sort Columns |

|---|---|---|---|---|---|

| Stocks | Daily OHLC | OLAP | By year | Trade time | None |

| Stocks | 1-Minute OHLC | OLAP | By day | Trade time | None |

| Options | Snapshot | TSDB | By day + HASH20(option code) | Trade date + Option code | Option code + Receive time |

| Futures | Snapshot | TSDB | By day + HASH10(futures code) | Trade date + Futures code | Futures code + Receive time |

| Futures | Daily OHLC | OLAP | By year | Trade time | None |

| Futures | 1-Minute OHLC | OLAP | By day | Trade time | None |

| Interbank Bonds | ESP Quote | TSDB | By day | Creation date | Bond code + Creation time |

| Interbank Bonds | ESP Trade | TSDB | By day | Creation date | Bond code + Creation time |

| Interbank Bonds | QB Quote | TSDB | By day | Market time | Bond code + Market time |

| Interbank Bonds | QB Trade | TSDB | By day | Market time | Bond code + Market time |

2.2. Narrow Format Table for Factor Storage

Factor mining is an essential component of quantitative trading. With the growing scale of quantitative trading and AI model training, practitioners must handle large volumes of factor data. Efficient storage of such data is crucial.

There are two main formats for storing factor data - narrow and wide:

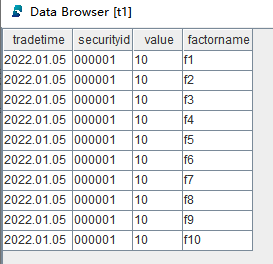

- The narrow format table has four columns, holding timestamps, security IDs,

factor names and factor values, as shown in the image 2-1.

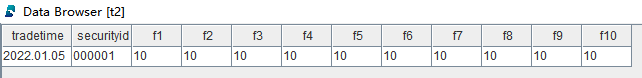

Figure 1. Image 2-1 Narrow Format Table - The wide format has a separate column for each factor, as shown in the image

2-2.

Figure 2. Image 2-2 Wide Format Table

We recommend using a narrow format table to store factor data. Compared to the wide format, the narrow format offers the following advantages:

- More efficient deletion of expired or deprecated factors

In a narrow format table, deleting a specific factor involves deleting certain rows concentrated in one or several partitions, which makes the operation highly efficient.

- More efficient addition of new factors

In a wide format table, adding a factor involves adding a column and updating it, which is less efficient. In contrast, in a narrow format table, adding a factor only requires inserting rows.

- More efficient updates of specific factors by name

A wide format table requires updating an entire column, whereas a narrow format table updates only relevent rows.

- Optimized multi-factor query performance

Using the PIVOT BY statement, narrow data can be transformed into the panel format, where each row represents a unique combination of a timestamp and a security ID, and each factor is presented in a separate column. Parallel optimization of the

PIVOT BYstatement ensures efficient multi-factor queries with the narrow format.

The following partitioning strategies are recommended for storing factor data with 5,000 symbols at various frequencies, using the TSDB storage engine:

| Instrument Category | Factor Frequency | Partitioning Strategy | Partition Columns | Sort Columns | Reduced Sort Key Count |

|---|---|---|---|---|---|

| Stocks | Daily | By year + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | 30-minute | By year + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | 10-minute | By month + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | 1-minute | By day + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | 1-second | By hour + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | Level-2 snapshot (3–5s) | By day + factor name | Trade time + Factor name | Stock code + Trade time | 500 |

| Stocks | Level-2 tick-by-tick | By hour + factor name + HASH10(stock code) | Trade time + Factor name + Stock code | Stock code + Trade time | No reduction |

| Futures | 500-mili | By day + factor name | Trade time + Factor name | Futures code + Trade time | 500 |

3. Examples for Market Data Storage

Based on the partitioning strategies discussed in the previous chapter, this chapter provides the corresponding DLang scripts.

3.1. Stock Data

3.1.1. Daily OHLC

create database "dfs://ohlc_day_level"

partitioned by RANGE(2000.01M + (0..30)*12)

engine='OLAP'

create table "dfs://ohlc_day_level"."ohlc_day"(

securityid SYMBOL

tradetime TIMESTAMP

open DOUBLE

close DOUBLE

high DOUBLE

low DOUBLE

vol INT

val DOUBLE

vwap DOUBLE

)

partitioned by tradetimeThe script creates the database and table for storing daily OHLC data, using the OLAP storage engine and partitioned by year. Each partition contains daily OHLC data for all stocks within that year.

3.1.2. 1-Minute OHLC

create database "dfs://ohlc_minute_level"

partitioned by VALUE(2020.01.01..2021.01.01)

engine='OLAP'

create table "dfs://ohlc_minute_level"."ohlc_minute"(

securityid SYMBOL

tradetime TIMESTAMP

open DOUBLE

close DOUBLE

high DOUBLE

low DOUBLE

vol INT

val DOUBLE

vwap DOUBLE

)

partitioned by tradetimeThe script creates the database and table for storing 1-minute OHLC data, using the OLAP storage engine and partitioned by day. Each partition contains 1-minute OHLC data for all stocks on that day.

3.2. Option Data

create database "dfs://options"

partitioned by VALUE(2020.01.01..2021.01.01), HASH([SYMBOL, 20])

engine='TSDB'

create table "dfs://options"."options"(

TradingDay DATE[comment="trade date", compress="delta"]

ExchangeID SYMBOL

LastPrice DOUBLE

PreSettlementPrice DOUBLE

PreClosePrice DOUBLE

PreOpenInterest DOUBLE

OpenPrice DOUBLE

HighestPrice DOUBLE

LowestPrice DOUBLE

Volume INT

Turnover DOUBLE

OpenInterest DOUBLE

ClosePrice DOUBLE

SettlementPrice DOUBLE

UpperLimitPrice DOUBLE

LowerLimitPrice DOUBLE

PreDelta DOUBLE

CurrDelta DOUBLE

UpdateTime SECOND

UpdateMillisec INT

BidPrice DOUBLE[]

BidVolume INT[]

AskPrice DOUBLE[]

AskVolume INT[]

AveragePrice DOUBLE

ActionDay DATE

InstrumentID SYMBOL

ExchangeInstID STRING

BandingUpperPrice DOUBLE

BandingLowerPrice DOUBLE

tradeTime TIME

receivedTime NANOTIMESTAMP

perPenetrationTime LONG

)

partitioned by TradingDay, InstrumentID,

sortColumns=[`InstrumentID,`ReceivedTime],

keepDuplicates=ALLThe script creates the database and table for storing option snapshot data, using

the TSDB storage engine and composite partitioned by day and the hash value of

InstrumentID. The data is sorted by

InstrumentIDand receivedTime columns.

3.3. Futures Data

3.3.1. Snapshots

create database "dfs://futures"

partitioned by VALUE(2020.01.01..2021.01.01), HASH([SYMBOL, 10])

engine='TSDB'

create table "dfs://futures"."futures"(

TradingDay DATE[comment="trade date", compress="delta"]

ExchangeID SYMBOL

LastPrice DOUBLE

PreSettlementPrice DOUBLE

PreClosePrice DOUBLE

PreOpenInterest DOUBLE

OpenPrice DOUBLE

HighestPrice DOUBLE

LowestPrice DOUBLE

Volume INT

Turnover DOUBLE

OpenInterest DOUBLE

ClosePrice DOUBLE

SettlementPrice DOUBLE

UpperLimitPrice DOUBLE

LowerLimitPrice DOUBLE

PreDelta DOUBLE

CurrDelta DOUBLE

UpdateTime SECOND

UpdateMillisec INT

BidPrice DOUBLE[]

BidVolume INT[]

AskPrice DOUBLE[]

AskVolume INT[]

AveragePrice DOUBLE

ActionDay DATE

InstrumentID SYMBOL

ExchangeInstID STRING

BandingUpperPrice DOUBLE

BandingLowerPrice DOUBLE

tradeTime TIME

receivedTime NANOTIMESTAMP

perPenetrationTime LONG

)

partitioned by TradingDay, InstrumentID,

sortColumns=[`InstrumentID,`ReceivedTime],

keepDuplicates=ALLThe script creates the database and table for storing futures snapshot data,

using the TSDB storage engine and composite partitioned by day and the hash

value of InstrumentID. The data is sorted by

InstrumentIDand receivedTime

columns.

3.3.2. Daily OHLC

create database "dfs://ohlc_day_level"

partitioned by RANGE(2000.01M + (0..30)*12)

engine='OLAP'

create table "dfs://ohlc_day_level"."ohlc_day"(

securityid SYMBOL

tradetime TIMESTAMP

open DOUBLE

close DOUBLE

high DOUBLE

low DOUBLE

vol INT

val DOUBLE

vwap DOUBLE

)

partitioned by tradetimeThe script creates the database and table for storing daily futures OHLC data, using the OLAP storage engine and partitioned by year. Each partition contains daily OHLC data for all contracts within that year.

3.3.3. 1-Minute OHLC

create database "dfs://ohlc_minute_level"

partitioned by VALUE(2020.01.01..2021.01.01)

engine='OLAP'

create table "dfs://ohlc_minute_level"."ohlc_minute"(

securityid SYMBOL

tradetime TIMESTAMP

open DOUBLE

close DOUBLE

high DOUBLE

low DOUBLE

vol INT

val DOUBLE

vwap DOUBLE

)

partitioned by tradetimeThe script creates the database and table for storing minute OHLC data, using the OLAP storage engine and partitioned by day. Each partition contains minute OHLC data for all contracts on that day.

3.4. Interbank Bonds

3.4.1. ESP Quotes

create database "dfs://ESP"

partitioned by VALUE(2023.01.01..2023.12.31)

engine='TSDB'

create table "dfs://ESP"."ESPDepthtable"(

createDate DATE[comment="creation time", compress="delta"]

createTime TIME[comment="creation time", compress="delta"]

bondCodeVal SYMBOL

marketDepth LONG

marketIndicator LONG

mdBookType LONG

mdSubBookType LONG

messageId LONG

messageSource STRING

msgSeqNum LONG

msgType STRING

askclearingMethod LONG[]

bidclearingMethod LONG[]

askdeliveryType LONG[]

biddeliveryType LONG[]

askinitAccountNumSixCode LONG[]

bidinitAccountNumSixCode LONG[]

asklastPx DOUBLE[]

bidlastPx DOUBLE[]

askmdEntryDate DATE[]

bidmdEntryDate DATE[]

askmdEntrySize LONG[]

bidmdEntrySize LONG[]

askmdEntryTime TIME[]

bidmdEntryTime TIME[]

askmdQuoteType LONG[]

bidmdQuoteType LONG[]

askquoteEntryID LONG[]

bidquoteEntryID LONG[]

asksettlType LONG[]

bidsettlType LONG[]

askyield DOUBLE[]

bidyield DOUBLE[]

ask1initPartyTradeCode STRING

bid1initPartyTradeCode STRING

ask2initPartyTradeCode STRING

bid2initPartyTradeCode STRING

ask3initPartyTradeCode STRING

bid3initPartyTradeCode STRING

ask4initPartyTradeCode STRING

bid4initPartyTradeCode STRING

ask5initPartyTradeCode STRING

bid5initPartyTradeCode STRING

ask6initPartyTradeCode STRING

bid6initPartyTradeCode STRING

ask7initPartyTradeCode STRING

bid7initPartyTradeCode STRING

ask8initPartyTradeCode STRING

bid8initPartyTradeCode STRING

ask9initPartyTradeCode STRING

bid9initPartyTradeCode STRING

ask10initPartyTradeCode STRING

bid10initPartyTradeCode STRING

securityID SYMBOL

securityType STRING

senderCompID STRING

senderSubID STRING

sendingTime TIMESTAMP

symbol STRING

)

partitioned by createDate,

sortColumns=[`securityID, `createTime]The script creates the database and table for storing ESP quote data, using

the TSDB storage engine and partitioned by day. The data is sorted by

securityID and createTime columns.

Each partition contains quote data for all bonds on that day.

3.4.2. ESP Trade

create database "dfs://ESP"

partitioned by VALUE(2023.01.01..2023.12.31)

engine='TSDB'

create table "dfs://ESP"."ESPTradetable"(

createDate DATE[comment="creation time", compress="delta"]

createTime TIME[comment="creation time", compress="delta"]

securityID SYMBOL

execId STRING

execType STRING

lastQty LONG

marketIndicator LONG

messageSource STRING

msgSeqNum LONG

msgType STRING

price DOUBLE

senderCompID STRING

stipulationType STRING

stipulationValue DOUBLE

symbol STRING

tradeDate DATE

tradeMethod LONG

tradeTime TIME

tradeType LONG

transactTime TIMESTAMP

)

partitioned by createDate,

sortColumns=[`securityID, `createTime]The script creates the database and table for storing ESP trade data, using

the TSDB storage engine and partitioned by day. The data is sorted by

securityID and createTime columns.

Each partition contains trade data for all bonds on that day.

3.4.3. QB Quotes

create database "dfs://QB_QUOTE"

partitioned by VALUE(2023.10.01..2023.10.31)

engine='TSDB'

create table "dfs://QB_QUOTE"."qbTable"(

SENDINGTIME TIMESTAMP

CONTRIBUTORID SYMBOL

MARKETDATATIME TIMESTAMP

SECURITYID SYMBOL

BONDNAME SYMBOL

DISPLAYLISTEDMARKET SYMBOL

BIDQUOTESTATUS INT

BIDYIELD DOUBLE

BIDPX DOUBLE

BIDPRICETYPE INT

BIDPRICE DOUBLE

BIDDIRTYPRICE DOUBLE

BIDVOLUME INT

BIDPRICEDESC STRING

ASKQUOTESTATUS INT

ASKYIELD DOUBLE

OFFERPX DOUBLE

ASKPRICETYPE INT

ASKPRICE DOUBLE

ASKDIRTYPRICE DOUBLE

ASKVOLUME INT

ASKPRICEDESC STRING

)

partitioned by MARKETDATATIME,

sortColumns=[`SECURITYID,`MARKETDATATIME]The script creates the database and table for storing QB quote data, using

the TSDB storage engine and partitioned by day. The data is sorted by

SECURITYID and MARKETDATATIME columns.

Each partition contains quote data for all bonds on that day.

3.4.4. QB Trade

create database "dfs://QB_TRADE"

partitioned by VALUE(2023.10.01..2023.10.31)

engine='TSDB'

create table "dfs://QB_TRADE"."lastTradeTable"(

SECURITYID SYMBOL

BONDNAME SYMBOL

SENDINGTIME TIMESTAMP

CONTRIBUTORID SYMBOL

MARKETDATATIME TIMESTAMP

MODIFYTIME SECOND

DISPLAYLISTEDMARKET SYMBOL

EXECID STRING

DEALSTATUS INT

TRADEMETHOD INT

YIELD DOUBLE

TRADEPX DOUBLE

PRICETYPE INT

TRADEPRICE DOUBLE

DIRTYPRICE DOUBLE

SETTLSPEED STRING

)

partitioned by MARKETDATATIME,

sortColumns=[`SECURITYID,`MARKETDATATIME]The script creates the database and table for storing QB trade data, using

the TSDB storage engine and partitioned by day. The data is sorted by

SECURITYID and MARKETDATATIME columns.

Each partition contains trade data for all bonds on that day.

4. Narrow Format Table for Factor Storage

4.1. 1-Day Factor Library

create database "dfs://dayFactorDB"

partitioned by RANGE(date(datetimeAdd(1980.01M,0..80*12,'M'))), VALUE(`f1`f2),

engine='TSDB'

create table "dfs://dayFactorDB"."dayFactorTB"(

tradetime DATE[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated at every

day, using the TSDB storage engine and composite partitioned by day and factor

name. The data is sorted by securityid and

tradetime columns.

The TSDB engine supports specifyingsortColumns used to sort and index the

data within each partition. If multiple columns are specified, the last column

must be either a temporal column or an integer column. The preceding columns are

used as the sort keys. This allows the engine to quickly locate records within a

partition. Except for the last element of sortColumns, the preceding

elements—collectively referred to as the SortKey—can be specified as the columns

that are frequently used as filtering conditions in queries. As a rule of thumb,

the number of SortKey entries should not exceed 1,000 per partition for optimal

performance. The sortKeyMappingFunction parameter can be used to reduce

the dimensionality of SortKey entries when needed. Based on our tests, we found

the optimal configuration for daily factor storage is to sort partitions by the

securityid and tradetime columns with

sortKeyMappingFunction set to 500.

4.2. 30-Minute Factor Library

create database "dfs://halfhourFactorDB"

partitioned by RANGE(date(datetimeAdd(1980.01M,0..80*12,'M'))), VALUE(`f1`f2),

engine='TSDB'

create table "dfs://halfhourFactorDB"."halfhourFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL,

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated on a

30-minute basis, using the TSDB storage engine and composite partitioned by year

and factor name. The data is sorted by securityid and

tradetime columns.

4.3. 10-Minute Factor Library

create database "dfs://tenMinutesFactorDB"

partitioned by VALUE(2023.01M..2023.06M),

VALUE(`f1`f2),

engine='TSDB'

create table "dfs://tenMinutesFactorDB"."tenMinutesFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated on a

10-minute basis, using the TSDB storage engine and composite partitioned by

month and factor name. The data is sorted by the securityid and

tradetime columns.

4.4. 1-Minute Factor Library

create database "dfs://minuteFactorDB"

partitioned by VALUE(2012.01.01..2021.12.31), VALUE(`f1`f2),

engine='TSDB'

create table "dfs://minuteFactorDB"."minuteFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated on a

1-minute basis, using the TSDB storage engine and composite partitioned by day

and factor name. The data is sorted by the securityid and

tradetime columns.

4.5. 1-Second Factor Library

create database "dfs://secondFactorDB"

partitioned by VALUE(datehour(2022.01.01T00:00:00)..datehour(2022.01.31T00:00:00)), VALUE(`f1`f2),

engine='TSDB'

create table "dfs://secondFactorDB"."secondFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated on a

1-second basis, using the TSDB storage engine and composite partitioned by hour

and factor name. The data is sorted by the securityid and

tradetime columns.

4.6. Level-2 Snapshot Factor Library

create database "dfs://level2FactorDB" partitioned by VALUE(2022.01.01..2022.12.31), VALUE(["f1", "f2"]),

engine='TSDB'

create table "dfs://level2FactorDB"."level2FactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated based on

the level 2 snapshot data, using the TSDB storage engine and composite

partitioned by day and factor name. The data is sorted by the

securityid and tradetime columns.

4.7. Tick-by-Tick Factor Library

create database "dfs://tickFactorDB" partitioned by VALUE(2022.01.01..2022.12.31), VALUE(["f1", "f2"]), HASH([SYMBOL,10])

engine='TSDB'

create table "dfs://tickFactorDB"."tickFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,securityid,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALLThe script creates the database and table for storing factors calculated based on

the tick-by-tick data, using the TSDB storage engine and composite partitioned

by day, factor name, and the hash value of securityid. Since

each partition contains approximately 500 stocks, there is no need to specify

the sortKeyMappingFunction parameter. The data is sorted by the

securityid and tradetime columns.

4.8. 500-Millisecond Futures Factor Library

create database "dfs://futuresFactorDB"

partitioned by VALUE(2022.01.01..2023.01.01), VALUE(["f1", "f2"]),

engine='TSDB'

create table "dfs://futuresFactorDB"."futuresFactorTB"(

tradetime TIMESTAMP[comment="time column", compress="delta"],

securityid SYMBOL,

value DOUBLE,

factorname SYMBOL

)

partitioned by tradetime, factorname,

sortColumns=[`securityid, `tradetime],

keepDuplicates=ALL,

sortKeyMappingFunction=[hashBucket{, 500}]The script creates the database and table for storing factors calculated at every

500 milliseconds, using the TSDB storage engine and composite partitioned by day

and factor name. The data is sorted by the securityid and

tradetime columns.

5. Conclusion

For common types of financial data, we provide best-practice recommendations for database and table creation. Users can further customize parameters to meet specific needs.

Further Reading:

6. FAQ

Error When Creating a Database: Duplicate Database Name

When creating a database, you may encounter the following error:

create database partitioned by VALUE(["f1","f2"]), engine=TSDB => It is not allowed to overwrite an existing database.Solution:

Use a different database name or delete the existing one.

Drop an existing database:

dropDatabase("dfs://dayFactorDB")Check whether a database exists:

existsDatabase("dfs://dayFactorDB")